Methods for Visual Odometry

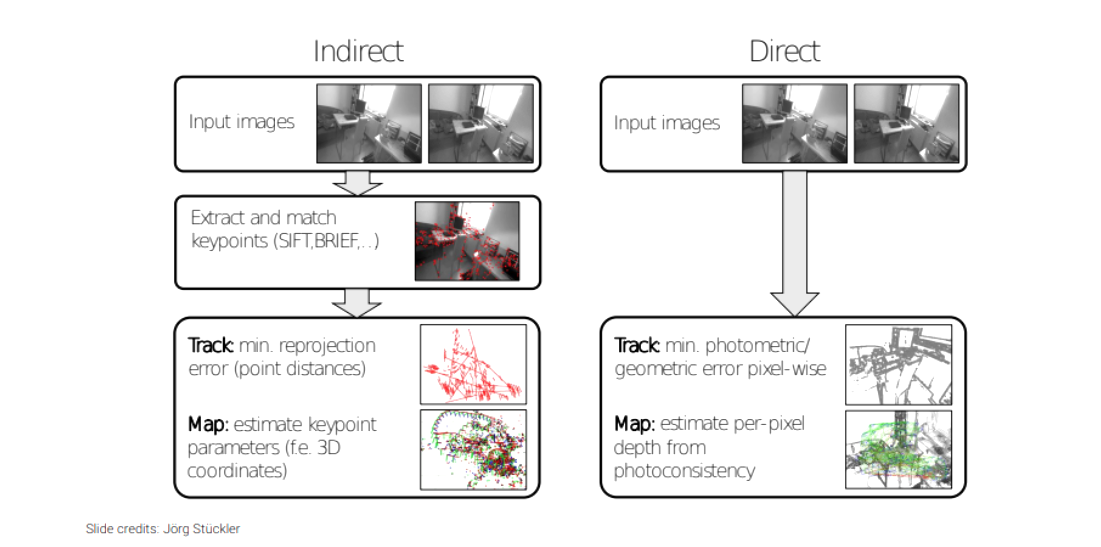

Direct Methods

Direct methods of visual odometry work by directly using pixel intensity values from images to estimate motion. This approach does not explicitly extract features from the images. Instead, it utilizes the raw image data to compute the motion between frames.

Motion is estimated by comparing pixel intensities between successive images, typically by searching for the camera motion that minimizes the intensity (photometric) difference between them. This makes direct methods comparatively robust in low-texture environments where feature extraction is difficult, although they are sensitive to lighting changes and can require more computational resources. Semi-direct Visual Odometry (SVO) is often cited in this context, although it combines feature-based and direct approaches and is better described as a hybrid method.
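To make the idea of pixel intensity matching concrete, here is a minimal sketch in plain Python. It assumes the simplest possible motion model, a pure integer pixel shift between two small synthetic frames, and finds it by exhaustive search. The helpers `photometric_error`, `estimate_shift`, and `make_frame` are illustrative names, not part of any library, and real direct methods optimize a full camera pose rather than a 2D shift.

```python
# Toy direct alignment: find the integer pixel shift that minimizes
# the photometric (intensity) error between two frames.
# Assumption: motion is a pure 2D translation; real systems estimate a 6-DoF pose.

def photometric_error(prev, curr, dx, dy):
    """Mean squared difference between prev(x, y) and curr(x + dx, y + dy),
    taken over the pixels where both frames are defined."""
    h, w = len(prev), len(prev[0])
    total, count = 0.0, 0
    for y in range(h):
        for x in range(w):
            xs, ys = x + dx, y + dy
            if 0 <= xs < w and 0 <= ys < h:
                diff = prev[y][x] - curr[ys][xs]
                total += diff * diff
                count += 1
    return total / count if count else float("inf")


def estimate_shift(prev, curr, max_shift=3):
    """Try every integer shift in the search window and keep the best one."""
    candidates = [(dx, dy)
                  for dy in range(-max_shift, max_shift + 1)
                  for dx in range(-max_shift, max_shift + 1)]
    return min(candidates, key=lambda s: photometric_error(prev, curr, s[0], s[1]))


def make_frame(width, height, ox, oy):
    """Synthetic frame: a smooth intensity blob centred at (ox, oy)."""
    return [[255.0 / (1.0 + (x - ox) ** 2 + (y - oy) ** 2) for x in range(width)]
            for y in range(height)]


prev_frame = make_frame(16, 12, 6, 5)
curr_frame = make_frame(16, 12, 8, 4)  # image content moved by (+2, -1) pixels
shift = estimate_shift(prev_frame, curr_frame)
print(shift)  # (2, -1)
```

No features are extracted anywhere in this sketch: every pixel contributes to the error, which is why the approach still works on smooth, low-texture content but breaks down if the brightness of the scene changes between frames.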

Indirect Methods

Indirect methods, also known as feature-based methods, involve detecting and matching key features between different images. These features could be points, edges, or other distinct elements in the environment.

The matched features are then used to estimate the motion between frames. This approach tends to be more robust to lighting changes and is generally more efficient, but it can struggle in environments with few distinct features or in highly dynamic scenes. ORB-SLAM, which uses ORB (Oriented FAST and Rotated BRIEF) features, is a widely used example of an indirect method.
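The matching step can be sketched in plain Python as follows. The keypoints and 8-bit binary descriptors below are made-up values standing in for the output of a real detector such as ORB, whose binary descriptors are likewise compared by Hamming distance. The functions `hamming`, `match_features`, and `estimate_translation` are hypothetical helpers, and the motion model is reduced to a 2D translation for brevity.

```python
from statistics import median


def hamming(a, b):
    """Number of differing bits between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")


def match_features(desc_a, desc_b, max_distance=2):
    """Brute-force nearest-neighbour matching with a mutual (cross) check."""
    matches = []
    for i, da in enumerate(desc_a):
        j = min(range(len(desc_b)), key=lambda k: hamming(da, desc_b[k]))
        i_back = min(range(len(desc_a)), key=lambda k: hamming(desc_a[k], desc_b[j]))
        if i_back == i and hamming(da, desc_b[j]) <= max_distance:
            matches.append((i, j))
    return matches


def estimate_translation(kps_a, kps_b, matches):
    """Median displacement of matched keypoints (assumes pure 2D translation)."""
    dxs = [kps_b[j][0] - kps_a[i][0] for i, j in matches]
    dys = [kps_b[j][1] - kps_a[i][1] for i, j in matches]
    return median(dxs), median(dys)


# Hypothetical keypoints (x, y) and descriptors from the previous frame.
kps_prev = [(10, 12), (40, 8), (25, 30), (5, 44)]
desc_prev = [0b10110010, 0b01001101, 0b11100001, 0b00011110]

# The same points in the current frame, shifted by (+3, -2) and listed in a
# different order; one descriptor has a flipped bit to mimic noise.
kps_curr = [(28, 28), (13, 10), (8, 42), (43, 6)]
desc_curr = [0b11100001, 0b10110011, 0b00011110, 0b01001101]

matches = match_features(desc_prev, desc_curr)
translation = estimate_translation(kps_prev, kps_curr, matches)
print(translation)  # (3.0, -2.0)
```

Only four points are processed here instead of every pixel, which illustrates why feature-based pipelines are generally efficient. It also shows the weakness: with too few distinct features there is nothing to match.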

Hybrid Methods

In addition to purely direct or indirect approaches, there are hybrid methods that combine elements of both. These methods aim to leverage the strengths of each approach to improve accuracy and robustness.

Semi-direct Visual Odometry (SVO) is a prominent example. It blends feature extraction with pixel intensity analysis: features provide reliable correspondences, while pixel data is used directly to refine the motion estimate. For more on SVO, see Semi-direct Visual Odometry (SVO) at the University of Zurich.
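The following plain-Python sketch illustrates the general hybrid idea under strong simplifications: a few high-gradient pixels are selected as feature-like locations, and motion is then estimated by minimizing the photometric error over small patches around those locations only. It is not SVO's actual pipeline. The helper names, the gradient-based selection (a stand-in for a real corner detector), and the pure 2D shift model are all assumptions made for illustration.

```python
def make_frame(width, height, ox, oy):
    """Synthetic frame: a smooth intensity blob centred at (ox, oy)."""
    return [[255.0 / (1.0 + (x - ox) ** 2 + (y - oy) ** 2) for x in range(width)]
            for y in range(height)]


def select_keypoints(img, count=5, border=4):
    """Pick the pixels with the strongest intensity gradient, away from the border."""
    h, w = len(img), len(img[0])
    scored = []
    for y in range(border, h - border):
        for x in range(border, w - border):
            gx = img[y][x + 1] - img[y][x - 1]
            gy = img[y + 1][x] - img[y - 1][x]
            scored.append((gx * gx + gy * gy, x, y))
    scored.sort(reverse=True)
    return [(x, y) for _, x, y in scored[:count]]


def patch_error(prev, curr, keypoints, dx, dy, radius=1):
    """Sum of squared intensity differences over patches around the keypoints."""
    total = 0.0
    for kx, ky in keypoints:
        for py in range(ky - radius, ky + radius + 1):
            for px in range(kx - radius, kx + radius + 1):
                diff = prev[py][px] - curr[py + dy][px + dx]
                total += diff * diff
    return total


def align_sparse(prev, curr, keypoints, max_shift=3):
    """Search integer shifts; border >= radius + max_shift keeps indices in range."""
    candidates = [(dx, dy)
                  for dy in range(-max_shift, max_shift + 1)
                  for dx in range(-max_shift, max_shift + 1)]
    return min(candidates, key=lambda s: patch_error(prev, curr, keypoints, s[0], s[1]))


prev_frame = make_frame(16, 12, 6, 5)
curr_frame = make_frame(16, 12, 8, 4)  # image content moved by (+2, -1) pixels
keypoints = select_keypoints(prev_frame)
shift = align_sparse(prev_frame, curr_frame, keypoints)
print(shift)  # (2, -1)
```

Compared with a fully direct search, only a handful of small patches are evaluated per candidate motion, yet the estimate still comes from raw intensities rather than from descriptor matching.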